Today I’m wrapping up the 3-part series on duplicate content & SEO Highlander-style: “There can be only one.” If you missed the first two posts, Highlander SEO refers to the similarities between canonicalization and the centuries-old battle between life-force absorbing immortals popularized in a 1986 film.

We’ve covered how The Highlander is relevant to SEO (in addition to being an awesome movie), and how to find the duplicate content we must battle. Today is all about fixing duplicate content and consolidating all that glorious immortal life force … err link popularity … at a single URL for a single page of content.

Q1: How do you choose the canonical version?

A1: Ideally the canonical version will be the page that has the most link popularity. Typically it’s the version of the URL linked to from the primary navigation of a site, the one without extra parameters and tracking and other gobbledygook appended to it. It’s typically the tidiest looking URL for the simple reason that dynamic systems append extra messy looking stuff in different orders as you click around a site. Ideally if you’ve done URL rewrites or are blessed with a platform that generates clean URLs, the canonical will be a short, static URL that may have a useful keyword or two in it.

Following our example yesterday from Home Depot, the canonical URL would be http://www.homedepot.com/webapp/wcs/stores/servlet/thdsitemap_product_100088778_10053_10051 and all of the others would be canonicalized to it. (Note that the URL is most definitely not canonicalized now, so please do not use this as a positive example to emulate.) Of course I’d much prefer a shorter, rewritten URL to canonicalize to without the intervening platform-required directories: http://www.homedepot.com/p-100088778 or http://www.homedepot.com/c-garden-center/b-toro/p-100088778, but that’s a story for another time.

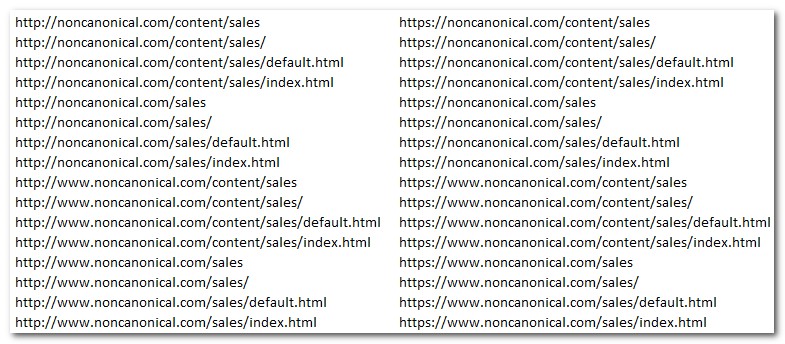

You’ll also need to choose a canonical protocol, subdomain, domain, file path, file extension, case, and … well … anything else that your server allows to load differently. For example, these 32 fictitious URLs all seem like the same page of content to humans and would load exactly the same content at different URLs.

The task sounds simple: Choose a canonical version of the URL and always link to that canonical version. For example, always link to http://www.noncanonical.com/sales/, which is canonicalized to:

- nonsecure http protocol (assuming it is nonsecure content)

- www subdomain

- noncanonical.com domain & TLD (if you own multiple domains/TLDs)

- file path without the /content/ directory

- ending with a trailing slash (not without a trailing slash and not a file+extension)

- all lowercase

- no parameters

This may look like a ridiculous example, but I just audited a network of sites with all of these (and more) sources of duplication on every site in the network. In the example above I didn’t even add case, tracking parameters, breadcrumb variations, parameter order variations or other URL variations, so this is actually a moderate example.

Can you see how the issue can multiply to hundreds of variations of a single page of content? For these duplicate URLs to exist and be indexed, at least one page is linking to each one. And each link to a duplicate URL is a lost opportunity to consolidate link popularity into a single canonical URL.
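To make the canonical form listed above concrete, here is a minimal Python sketch that rewrites a URL variation into it. The host name comes from the fictitious example; the `/content/` prefix handling and the list of default document names are assumptions for illustration, not rules from any particular platform.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.noncanonical.com"        # canonical subdomain + domain from the example
DEFAULT_DOCS = ("default.html", "index.html")  # hypothetical default-document names


def canonicalize(url: str) -> str:
    """Return the canonical form of a URL from the fictitious example site."""
    path = urlsplit(url).path.lower()          # all lowercase

    # Remove the duplicate /content/ directory from the start of the path.
    if path.startswith("/content/"):
        path = path[len("/content"):]

    # Replace a default document (file+extension) with its directory.
    for doc in DEFAULT_DOCS:
        if path.endswith("/" + doc):
            path = path[: -len(doc)]
            break

    # End directory-style paths with a trailing slash.
    last_segment = path.rsplit("/", 1)[-1]
    if not path.endswith("/") and "." not in last_segment:
        path += "/"

    # Nonsecure protocol, canonical host, no parameters, no fragment.
    return urlunsplit(("http", CANONICAL_HOST, path, "", ""))


print(canonicalize("https://NonCanonical.com/content/Sales/default.html?ref=nav"))
```

The sketch forces every variation onto one host and drops all parameters, which matches the list above but would be too aggressive for a site where some parameters change the content.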

Connor MacLeod: “How do you fight such a savage?”

Ramirez: “With heart, faith and steel. In the end there can be only one.”

Q2: How do you canonicalize once you’ve chosen the canonical URL?

A2: 301 redirects. Once the canonical URL has been chosen, 301 redirects are the ideal way to canonicalize, reduce content duplication and consolidate link popularity to a single canonical URL. There are other ways, and we’ll go over them in the next section, but only a 301 redirect CONSISTENTLY does all three of these things:

- Redirect the user agent to the destination URL;

- Pass link popularity to the destination URL;

- Deindex the URL that has been 301 redirected.

For sites with widespread canonicalization issues, pattern-based 301 redirects are the best bet. For instance, regular expressions can be written to 301 redirect every instance of a URL without the www subdomain to the same URL with the www subdomain. So the 301 redirect would detect the missing www in http://noncanonical.com/sales/ and would 301 redirect it to http://www.noncanonical.com/sales/, without having to write a 301 redirect specific to those 2 exact pages. For each element that creates duplicate content, a 301 redirect would be written to canonicalize that element. So the URL http://noncanonical.com/content/sales/default.html would trigger a 301 redirect to add the www subdomain, remove the /content directory and remove the default.html file+extension.

Yes, that’s 3 redirects for one URL. But assuming you change your own navigation to link to the canonical URLs, and assuming that there aren’t a boatload of sites referring monstrous traffic to your noncanonical URLs, this won’t be a long-term issue. The 301 redirects will consolidate the link popularity to the canonical URL and prompt the engines to deindex the undesirable URLs, which in turn will mean that there are fewer and fewer referrals to them. Which means that the 301s won’t be a long-term burden to the servers.

Speaking of which, fix your linking. Links to noncanonical content on your own site will only perpetuate the duplicate content issue, and put an extra burden on your servers as they serve multiple 301s before loading the destination URL.
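The pattern-based redirects described above are normally written in the web server's own rewrite configuration. As a rough, server-neutral illustration, the Python sketch below models the three rules from the example and traces the chain of 301s a single noncanonical URL would pass through. The rule patterns and function names are hypothetical.

```python
import re

# Hypothetical pattern-based rules, one per source of duplication.
RULES = [
    # Add the www subdomain when it is missing.
    (re.compile(r"^(https?://)noncanonical\.com(/|$)"), r"\1www.noncanonical.com\2"),
    # Remove the /content directory from the start of the path.
    (re.compile(r"^(https?://[^/]+)/content(/|$)"), r"\1\2"),
    # Remove the default.html file+extension.
    (re.compile(r"/default\.html$"), "/"),
]


def next_redirect(url):
    """Return the 301 target from the first matching rule, or None."""
    for pattern, replacement in RULES:
        new_url, count = pattern.subn(replacement, url, count=1)
        if count and new_url != url:
            return new_url
    return None


def redirect_chain(url, max_hops=10):
    """Follow the rules hop by hop and return every URL visited."""
    chain = [url]
    while len(chain) <= max_hops:
        target = next_redirect(chain[-1])
        if target is None:
            break
        chain.append(target)
    return chain


for hop in redirect_chain("http://noncanonical.com/content/sales/default.html"):
    print(hop)
```

Because each rule fires as its own hop, the example URL produces three redirects before it reaches http://www.noncanonical.com/sales/. Applying every rule before answering with a single 301 is one possible way to shorten the chain, though whether that is practical depends on the server.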

Q3: What if I can’t do that, are there other canonicalizing or deduplication tactics?

A3: Yes, but they’re not as effective. The other tactics to suppress or canonicalize content each lack at least one of the important benefits of 301 redirects. Again, this is very important: Only a 301 redirect CONSISTENTLY:

- Redirects the user agent to the destination URL;

- Passes link popularity to the destination URL;

- De-indexes the URL that has been 301 redirected.

Note that a 302 redirect only redirects the user agent. It does not pass link popularity or deindex the redirected URL. For some reason, 302 redirects are the default on many servers. Always check the redirects with a server header checker to be certain.
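If no server header checker is handy, a few lines of Python can do the same job. The sketch below sends a single HEAD request without following redirects and reports the status code and `Location` header. The function names are made up for this example, and some servers answer HEAD differently from GET, so treat the result as a first check rather than proof.

```python
import http.client
from urllib.parse import urlsplit


def describe_status(status: int) -> str:
    """Label a status code by the kind of redirect it represents."""
    if status == 301:
        return "301 permanent redirect"
    if status == 302:
        return "302 temporary redirect"
    if 300 <= status < 400:
        return f"{status} other redirect"
    return f"{status} not a redirect"


def check_redirect(url: str, timeout: float = 10.0):
    """Send one HEAD request and return (status, Location header or None)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)  # http.client never follows redirects itself
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()
```

Calling `check_redirect("http://noncanonical.com/sales/")` against a real host and passing the status to `describe_status` shows at a glance whether the server is answering with a 301 or a default 302.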

There are instances where 301 redirects are too complicated to be executed confidently, such as when legacy URLs are rewritten to a keyword-rich URL. In some cases there’s no reliable common element between the legacy URL & the rewritten URL to allow a pattern-based 301 redirect to be written.

When planning URL rewrites, always be sure you’ll be able to 301 redirect the legacy URLs. But if they’re already live and competing with the rewritten URLs and it’s too late to turn back, then all you can do is go forward. In this instance, consider 301 redirecting all legacy structures to the most appropriate category URL or the homepage.

If you can’t do that, your other options for the undesirable URLs are:

- Canonical tags: The latest rage. I’ve only observed Google use canonical tags, and only in some instances. They are a suggestion that, if Google (and eventually Yahoo & Bing) chooses to follow it, will pass link popularity to the canonical URL and devalue the noncanonical version of the URL. If you have difficulty executing 301 redirects because there is no pattern that can reliably detect a match between a legacy URL and a canonical URL, then it’s likely that you won’t be able to match the legacy URL and a canonical URL in a canonical tag either.

- 404 server header status: Prompts deindexation but does not pass link popularity or the user agent to the canonical URL. Once the URL is deindexed, it will not be crawled again unless links to it remain.

- Meta robots noindex: Prompts deindexation (or purgatory-like snippetless indexation) but does not pass link popularity or the user agent to the canonical URL. URLs may remain indexed but snippetless, as bots will continue to crawl some pages to determine if the meta robots noindex tag is still present. May be used alone or combined with follow or nofollow conditions.

- Robots.txt disallow: The robots.txt file at the root of a domain can be used to block good bots from crawling specified content or directories. Deindexation (or purgatory-like snippetless indexation) will eventually occur over 3-12 months depending on the number of links into the disallowed content. Does not pass link popularity or the user agent to the canonical URL.

- Rel=nofollow: Contrary to popular belief, the rel=nofollow attribute in the anchor tag does not prevent the crawl or indexation. It merely prevents link popularity from flowing through that one single link to that one single destination page. Does not deindex, does not pass link popularity, does not redirect the user agent.
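For the robots.txt disallow option in the list above, it helps to confirm which URLs a rule actually blocks before deploying it. This small sketch uses Python's standard `urllib.robotparser` to test URLs against a robots.txt body. The `Disallow: /content/` rule is a hypothetical example built on the fictitious site, and the check only reflects what a rule-obeying bot would be allowed to crawl.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the duplicate /content/ directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /content/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str, user_agent: str = "*") -> bool:
    """Return True if the rules above let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_crawlable("http://www.noncanonical.com/content/sales/"))  # blocked by the rule
print(is_crawlable("http://www.noncanonical.com/sales/"))          # not matched by any rule
```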

See this duplicate content decision matrix for resolving duplicate content to learn more about which situations call for which option.

These other options are also useful when redirecting the human user is undesirable because the URL variation triggers a content change that’s valuable to human usability or business needs but not to search engines. For example, tracking parameters in URLs may not work if 301 redirected, because the JavaScript doesn’t have time to fire and collect the data for the analytics package before the redirect occurs. In this instance, what’s best for SEO is bad for the ability to make data-driven business decisions. Ideally for SEO, tracking information would not be passed in the URL, so there wouldn’t be a duplicate URL to 301 redirect in the first place. But when there is, a canonical tag is the next line of defense. If that isn’t effective (and if the tracking code still can’t be changed), then the duplicate content can be deindexed with meta noindex, 404s, robots.txt disallows etc.
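As a sketch of the canonical tag approach for tracked URLs, the Python below strips tracking parameters from the requested URL and builds the tag that would go in the page's head. The parameter names in `TRACKING_PARAMS` are an assumed example set, so substitute whatever your analytics package appends. Unlike the strict "no parameters" rule used earlier, this version keeps any parameter that is not on the tracking list.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed tracking parameter names; adjust to match your analytics setup.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def strip_tracking(url: str) -> str:
    """Return the URL with tracking parameters and any fragment removed."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Build a canonical link element pointing at the untracked URL."""
    href = escape(strip_tracking(url), quote=True)
    return f'<link rel="canonical" href="{href}">'


print(canonical_tag("http://www.noncanonical.com/sales/?utm_source=newsletter&utm_medium=email"))
```

The visitor still lands on the tracked URL, so the analytics JavaScript can fire, while the tag suggests the clean URL to the engines.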

Keep in mind, though, that when content is suppressed instead of being canonicalized the link popularity that would flow to that canonical URL is now going nowhere. It is not being consolidated to the canonical URL to make that one single URL rank more strongly. It is just wasted. But at least the URL isn’t competing against its sister URLs for the same theme. The end result is kind of like The Highlander winning but losing a lot of blood. There is only one (URL) but instead of absorbing the life force of the other URLs with duplicate content, the meta noindex erases it from existence without channeling the life force back to The Highlander.

And so ends the 3-part series on Highlander-style SEO. The key thing to remember is “There can be only one!” That’s one URL for one page of content. Find the duplicate content with a crawler or log files and vanquish it with 301 redirects to consolidate its link popularity into a single, strong, canonical URL. Good luck, immortals.

Originally posted on Web PieRat.