Google’s cache view is a valuable window into how Googlebot “sees” a site. I find myself stalking the cache every couple of days in an effort to untangle architectural challenges to my SEO objectives. When I’m having difficulty helping colleagues understand why content or links are or aren’t crawlable, I often take them to the cache view as a quick and easy visual. Once they see what the bots see, straight from Googlebot itself, the conversation about how to resolve the issue is usually much easier. I wanted to include it in my article on advanced search operators at Practical eCommerce last week, but I hit the word count cap. So here’s the scoop on cache.

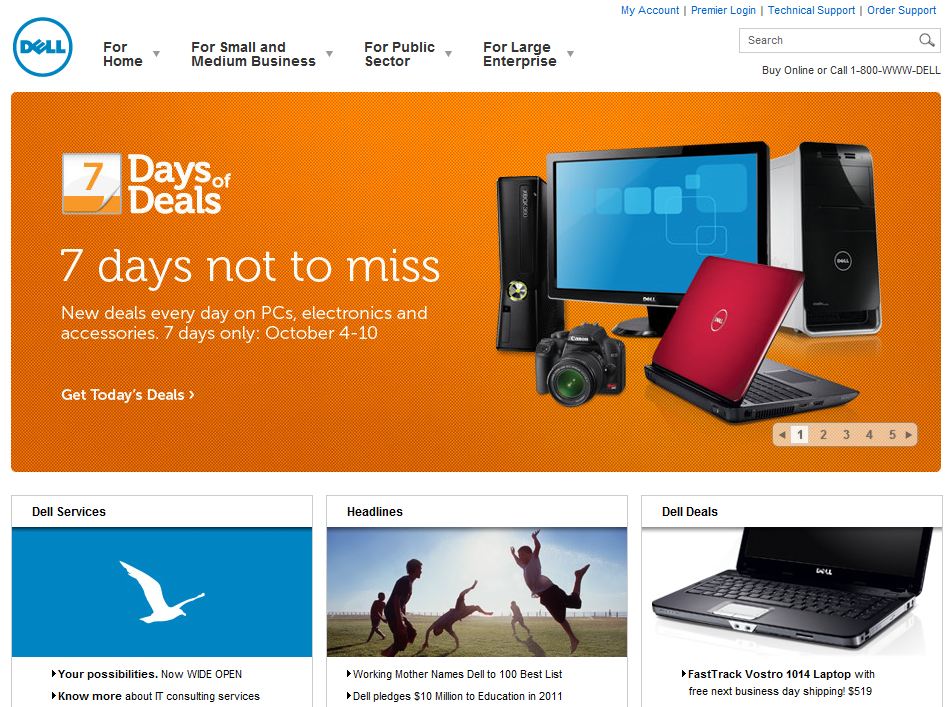

A site’s architecture and the technology choices its development team makes can make or break the bots’ ability to crawl a site. The cache view offers a quick window into the bots’-eye view. For example, most humans surfing with a modern browser that runs JavaScript and accepts cookies will see Dell’s homepage like this:

As a human I am able to use the drop-down menus to navigate to the main areas of the site, quickly consume many of Dell’s priority messages from the static feature boxes and the Flash carousel, and browse the basic HTML links toward the bottom of the page. Dell makes its marketing priorities very clear and easy to understand… for humans with modern browsers. But what about the bots? What content can they consume? Let’s take a look at the cache view [cache:www.dell.com]:

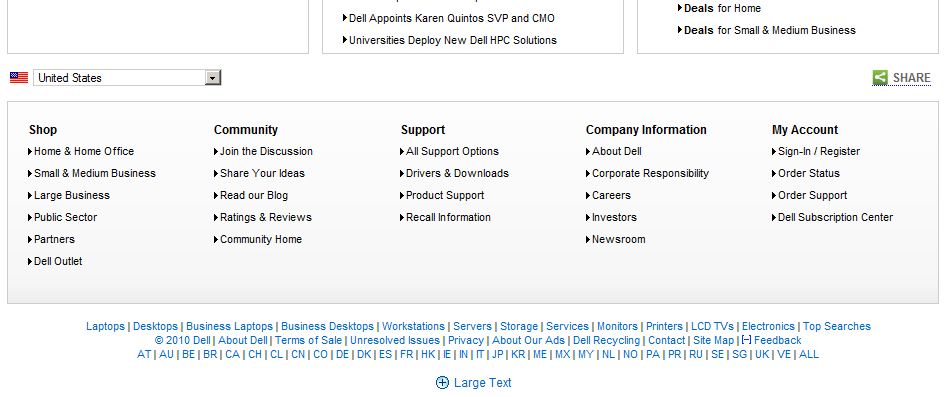

With the cache view the page looks remarkably similar. There’s a gray header at the top of the page indicating that Google last cached this page on Oct 4, 2010 18:22:07 GMT, one hour and one minute ago at the time of this article. So any changes that Dell made to the site in the last 61 minutes will not be reflected in this cache view. That’s a very important note when you’re trying to confirm the crawlability of some new architectural change: make sure the change has been cached before you start analyzing the cache view.
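That freshness check is simple date arithmetic. Here is a minimal sketch in Python, assuming the timestamp has been copied by hand from the gray header. The function names and the deployment time are hypothetical, and the timestamp format is taken from the example above rather than from any documented Google format.

```python
from datetime import datetime, timedelta, timezone

# Timestamp layout as quoted from the cache header in this article,
# e.g. "Oct 4, 2010 18:22:07 GMT". Assumes English month abbreviations.
HEADER_FORMAT = "%b %d, %Y %H:%M:%S GMT"


def parse_cache_timestamp(text: str) -> datetime:
    """Turn the header timestamp into a timezone-aware UTC datetime."""
    return datetime.strptime(text, HEADER_FORMAT).replace(tzinfo=timezone.utc)


def change_is_cached(cached_at: datetime, deployed_at: datetime) -> bool:
    """A change can only appear in the cache if the snapshot is newer than it."""
    return cached_at >= deployed_at


cached_at = parse_cache_timestamp("Oct 4, 2010 18:22:07 GMT")

# "Now" for the article: one hour and one minute after the snapshot.
viewed_at = cached_at + timedelta(hours=1, minutes=1)
cache_age = viewed_at - cached_at

# Hypothetical change pushed live 30 minutes before viewing the cache.
deployed_at = viewed_at - timedelta(minutes=30)

print(cache_age)                                 # 1:01:00
print(change_is_cached(cached_at, deployed_at))  # False
```

In this made-up case the change went live after the snapshot was taken, so the cache view can’t tell you anything about it yet.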

The second thing to consider is that the cache view shows a far more human-centric view of the page than I’d expect. That’s because the initial cache view still relies on your modern browser to run the JavaScript, apply the CSS and accept the cookies that the cached page calls for. To see the bots’-eye view more realistically, we need to disable those by clicking on the “Text-only version” link in the upper right corner of the gray box. Now we see:

Now we’re seeing the textual version of the site, stripped of its technical finery. The rollover navigation in the header no longer functions. The links to main categories are still crawlable as plain text links, but the subcategory links that appeared in the rollovers are gone, so the homepage doesn’t pass link popularity down to the subcategory pages. Depending on the marketing value of those pages, the lack of link juice flowing there could be an issue. The next thing we see is that the big lovely Flash carousel, so front-and-center for human consumption, doesn’t exist without JavaScript enabled. Assuming the pages displayed in the Flash piece are valuable landing pages, which they likely are to warrant homepage coverage and development time, this again is a missed opportunity to flow link juice to important pages. Both of these features, the navigation and the Flash carousel, could be coded to degrade gracefully using CSS, so that bots get the same crawlable text and links that humans do.
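To make the difference concrete, here is a rough sketch in Python of how a parser that doesn’t execute JavaScript treats a page. It only approximates the text-only view and is not how Googlebot actually works. The sample markup and the `StaticLinkCollector` and `static_links` names are made up for illustration and are not Dell’s real code.

```python
from html.parser import HTMLParser


class StaticLinkCollector(HTMLParser):
    """Collect href values from <a> tags present in the raw HTML."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def static_links(html: str) -> list:
    """Return the links visible without running any scripts."""
    collector = StaticLinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


# Hypothetical page: two plain HTML links, plus one link that only
# exists after a browser runs the script.
SAMPLE_HTML = """
<ul id="nav">
  <li><a href="/laptops">Laptops</a></li>
  <li><a href="/desktops">Desktops</a></li>
</ul>
<script>
  document.write('<a href="/deals">Deals</a>');
</script>
"""

print(static_links(SAMPLE_HTML))  # ['/laptops', '/desktops']
```

The `/deals` link never shows up because the parser treats everything inside the script tag as text, not markup. That is the same kind of gap the text-only version exposes for the rollover menus and the carousel.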

Just to be safe, I double-check any issue I see in cache view (or any content I expect to see but don’t) by manually disabling JavaScript, CSS and cookies in my browser and setting my user agent to Googlebot. For more detailed information on the Firefox plugins I use to do this, see Surfing Like a Search Engine Spider on Practical Ecom. Cache view is a quick way to decide whether a deeper analysis is required.
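If you’d rather script that double check than use browser plugins, something like the following Python sketch gets close. It isn’t the plugin workflow described above, just an approximation: Python’s standard `urllib` doesn’t run JavaScript, apply CSS or store cookies by default, so the only thing left to set is the user agent. The function names are hypothetical, and the user agent string is the one Googlebot is commonly documented as sending.

```python
import urllib.request

# User agent string commonly documented for Googlebot.
GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
)


def build_spider_request(url: str) -> urllib.request.Request:
    """Prepare a request that identifies itself as Googlebot."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_as_spider(url: str, timeout: float = 10.0) -> str:
    """Fetch the raw HTML: no JavaScript, no CSS, no stored cookies."""
    request = build_spider_request(url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# Example (makes a live network request):
# html = fetch_as_spider("http://www.dell.com/")
```

Keep in mind that a site can tell a spoofed user agent from the real Googlebot by checking the requesting IP address, so the response you get this way may not match what Google actually receives.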

Note: The cache: operator only works on Google, but Yahoo Site Explorer offers a cache link on each page in its report as well. Bing does not support the cache: operator.

Originally posted on Web PieRat.